Original Entry(ja) is here.

The Azure DataLake Store connector now supports service principals, as was also announced on LogicApps Live. Instead of "sign in with an account", you register a dedicated application in Azure AD and grant it permissions, which gives access that is not tied to an individual user. With the earlier per-user sign-in method the connection did not work for me, for reasons I never figured out, but with service principal support I managed to get it working, so I tried it out and wrote it up here.

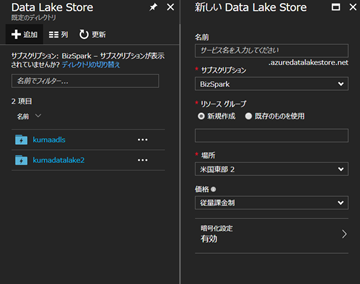

DataLake Store is a large-scale data storage service provided by Azure that accepts data of any type, structured or unstructured. Once data is uploaded here, it can be used with various data analysis services such as DataLake Analytics. To use it you need a DataLake Store account, so we will create one from the portal.

Currently, only three regions are available: "East US 2", "Central US" and "North Europe". I expect it will spread to other regions in the future, and given the nature of DataLake Store, response time is rarely a concern, so I do not think you need to worry much about the region at this point. In DataLake Store, the service name you specify first is treated as the "account name". In the screenshot above, "kumaadls" and "kumadatalake2" are account names, and this name is also used later when specifying the service principal connection details.

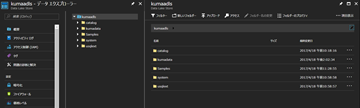

In the Data Explorer, you can create new folders and upload and download files directly. Once the DataLake Store is ready to use like this, you can perform various operations on it with the connector on the LogicFlow side.

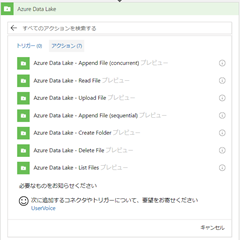

The functions currently provided are shown above: there are no triggers, and seven actions are available.

The first time you use the connector, you will be asked to sign in as above. There is a "Connect using service principals" link in the lower left, so this time we will connect from there.

When using a service principal, you will be prompted for the values above. The connection name is only used to identify the connection within LogicFlow, so any suitable name will do. The Client ID is the value displayed when you register the application in Azure AD. The Client Secret is the value of the password-like key that you register for the application. The Tenant ID is a value you can check in the properties of Azure AD.

For application registration in Azure AD and how to find values such as the Client ID, please see my earlier post; I think it will be helpful.
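To make the role of these three values concrete, here is a minimal sketch of the standard Azure AD client credentials request that a service principal uses to obtain a token. This is only an illustration, not a description of what the connector does internally. The function name `build_token_request` and the placeholder values are hypothetical, and the resource URI is my assumption for DataLake Store. The sketch only builds the request and does not send it.

```python
from urllib.parse import urlencode

# Assumed resource identifier for Azure DataLake Store; verify against current docs.
ADLS_RESOURCE = "https://datalake.azure.net/"


def build_token_request(tenant_id: str, client_id: str, client_secret: str):
    """Return (url, form_body) for an Azure AD client-credentials token request.

    Nothing is sent over the network; the caller would POST form_body to url
    with Content-Type: application/x-www-form-urlencoded.
    """
    # The Tenant ID selects which Azure AD directory issues the token.
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/token"
    # The Client ID and Client Secret identify and authenticate the application.
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "resource": ADLS_RESOURCE,
    })
    return url, body


if __name__ == "__main__":
    # Placeholder values; substitute the ones from your own Azure AD application.
    url, body = build_token_request("<tenant-id>", "<client-id>", "<client-secret>")
    print(url)
    print(body)
```

The connection dialog asks for the same three inputs, which is why none of them depends on a particular user account.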

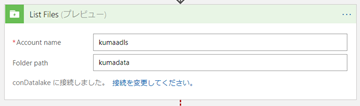

Once you have entered these values and the connection has been created, the DataLake connector becomes available.

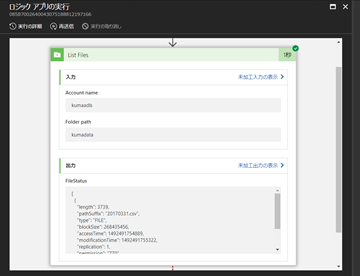

The above shows List Files, one of the actions, which retrieves the list of files in a specified folder. Account Name is the service name set in DataLake Store, and Folder Path is the path whose contents you want to list. The leading \ representing the root folder is not needed here.
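For reference, the two inputs map naturally onto the WebHDFS-style REST endpoint of DataLake Store. The sketch below builds such a listing URL from an account name and a folder path, assuming `/` as the path separator. The function name `list_files_url` and the sample folder `logs/2017` are hypothetical, and I am not claiming the connector builds its requests this way.

```python
from urllib.parse import quote


def list_files_url(account_name: str, folder_path: str) -> str:
    """Build a WebHDFS LISTSTATUS URL for a folder in a DataLake Store account."""
    # Strip leading and trailing slashes so the path is relative to the root,
    # mirroring the connector's "no leading root marker" convention.
    relative = folder_path.strip("/")
    return (
        f"https://{account_name}.azuredatalakestore.net"
        f"/webhdfs/v1/{quote(relative)}?op=LISTSTATUS"
    )


if __name__ == "__main__":
    # "kumaadls" is the account name from the screenshot; the folder is made up.
    print(list_files_url("kumaadls", "/logs/2017"))
```

Note that the folder path is passed through with its case preserved, which is what you would expect from a case-sensitive store.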

If the values are specified correctly, you get the list of files in the folder like this. Now for the caveat mentioned in the title: I have confirmed that there is one problem with how the folder is specified.

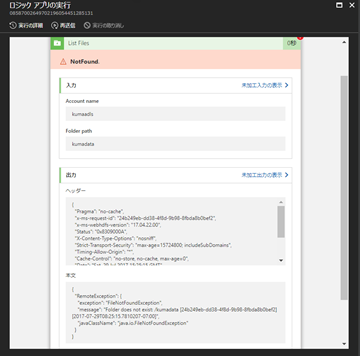

This is what it looks like when you run into the caveat, even though the values are specified in exactly the same way as in the successful example. The result is a `java.io.FileNotFoundException`, an error saying that the target folder does not exist. (I was slightly surprised to learn that it is implemented in Java.) This is a bug: with the current connector, a folder cannot be retrieved if its name in Azure DataLake Store contains capital letters. As far as I can tell, the connector converts the folder name it receives to lowercase, as if by ToLower, before requesting it from DataLake Store.

I could accept this if DataLake Store only allowed lowercase letters in folder names, but uppercase letters can be used as usual, so I believe this is a defect on the connector side.

Despite this problem, for now you can avoid it by not using capital letters in folder names and file names.
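If you want to check paths before handing them to the connector, a small helper like the following can flag the ones that would be affected. This is only a sketch based on the behavior I observed (the path appears to be lower-cased); the function names `uppercase_segments` and `is_connector_safe` and the sample paths are hypothetical.

```python
from typing import List


def uppercase_segments(path: str) -> List[str]:
    """Return the path segments that contain at least one uppercase letter."""
    return [seg for seg in path.split("/") if seg and seg != seg.lower()]


def is_connector_safe(path: str) -> bool:
    """True when lower-casing the path would leave it unchanged."""
    return path == path.lower()


if __name__ == "__main__":
    for candidate in ["data/raw/2017", "data/Raw/2017"]:
        if is_connector_safe(candidate):
            print(candidate, "is fine")
        else:
            print(candidate, "has uppercase in:", uppercase_segments(candidate))
```

Any path for which `is_connector_safe` returns False should be renamed, or avoided, until the connector is fixed.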

With LogicFlow you can now upload more and more data to DataLake Store. For example, with Flow it becomes easy to accumulate day-to-day data from OneDrive, CDS, or other services in DataLake Store. At present there is still no way to call the REST API directly to use DataLake Analytics, but even so I think this makes it much easier than before to aggregate data on a larger scale.
